When teaching introductory mechanics in physics, it is common to teach Newton’s first law of motion (N1) as a special case of the second (N2). In casual classroom lore, N1 addresses the branch of mechanics known as statics (zero acceleration) while N2 addresses dynamics (nonzero acceleration). However, without getting deep into concepts associated with Special and General Relativity, I claim this is not the most natural or effective interpretation of Newton’s first two laws.

N1 is the law of inertia. Historically, it was asserted as a formal launching point for Newton’s other arguments, clarifying misconceptions left over from the time of Aristotle. N1 is a pithy restatement of the principles established by Galileo, principles Newton was keenly aware of. Newton’s original language from the Latin can be translated roughly as “Law I: Every body persists in its state of being at rest or of moving uniformly straight forward, except insofar as it is compelled to change its state by force impressed.” This is attempting to address the question of what “a natural state” of motion is. According to N1, a natural state for an object is not merely being at rest, as Aristotle would have us believe, but rather uniform motion (of which “at rest” is a special case). N1 claims that an object changes its natural state when acted upon by external forces.

N2 then goes on to clarify this point. N2, in Newton’s language as translated from the Latin, was stated as “Law II: The alteration of motion is ever proportional to the motive force impress’d; and is made in the direction of the right line in which that force is impress’d.” In modern language, we would say that the net force acting on an object is equal to its mass times its acceleration, or

$$\vec{F}_{\text{net}} = m\vec{a}$$

In the typical introductory classroom, problems involving N2 would be considered dynamics problems (forgetting about torques for a moment). A net force generates accelerations.

To recover statics, where systems are in equilibrium (again, modulo torques), students and professors of physics frequently then back-substitute from here and say something like: in the case where $\vec{a} = 0$, clearly we recover N1, which can now be stated something like:

$$\vec{F}_{\text{net}} = 0$$

if and only if

$$\vec{a} = 0$$

This latter assertion certainly looks like the mathematical formulation of Newton’s phrasing of N1. Moreover, it seemed to follow from the logic of N2 so, ergo, “N1 is a special case of N2.”

But this is all a bit too ham-fisted for my tastes. Never mind how nonsensical it would be for someone as brilliant as Newton to start his three laws of motion with a special case of the second. That alone should give one pause. Moreover, Newton’s original language of the laws of motion is antiquated and doesn’t illuminate the important modern understanding very well. Although he was brilliant, we definitely know more physics now than Newton and understand his own laws at a deeper level than he did. For example, we have an appreciation for Electricity and Magnetism, Special Relativity, and General Relativity, all of which force one to clearly articulate Newton’s Laws at every turn, sometimes overthrowing them outright. This has forced physicists over the past 150 years to be very careful how the laws are framed and interpreted in modern terms.

So why isn’t N1 really a special case of N2?

I first gained an appreciation for why N1 is not best thought of as a special case of N2 when viewing the famous educational film Frames of Reference by Patterson Hume and Donald Ivey (below), which I use in my Modern Physics classes when setting up relative motion and frames of reference. Then it really hit home later while teaching a course specifically about Special Relativity from a book of the same name by T. M. Helliwell.

A key modern function of N1 is that it defines inertial frames. Although Newton himself never really addresses inertial frames in his work, this modern interpretation is of central importance in modern physics. Without this way of interpreting it, N1 does functionally become a special case of N2 if you treat pseudoforces as actual forces. That is, if “ma” and the frame kinematics are considered forces. In such a world, N1 is redundant and there really are only two laws of motion (N2 and the third law, N3, which we aren’t discussing here). So why don’t we frame Newton’s laws this way? Why have N1 at all? One might be able to get away with this kind of thinking in a civil engineering class, but forces are very specific things in physics and “ma” is not amongst them.

So why is “ma” not a force and why do we care about defining inertial frames?

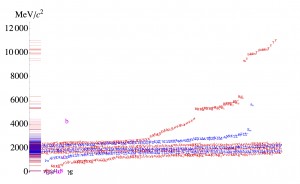

Basically an inertial frame is any frame where the first law is obeyed. This might sound circular, but it isn’t. I’ve heard people use the word “just” in that first point: “an inertial frame is just any frame where the first law is obeyed.” What’s the big deal? To appreciate the nuance a bit, the modern logic of N1 goes something like this:

if

$$\vec{F}_{\text{net}} = 0$$

and

$$\vec{a} = 0$$

then you are in an inertial frame.

Note, this is NOT the same as a special case of N2 as stated above in the “if and only if” phrasing:

$$\vec{F}_{\text{net}} = 0$$

if and only if

$$\vec{a} = 0$$

That is, N1 is a one-way if-statement that provides a clear test for determining if your frame is inertial. The way you do this is you systematically control all the forces acting on an object and balance them, ensuring that the net force is zero. A very important aspect of this is that the catalog of what constitutes a force must be well defined. Anything called a “force” must be linked back to the four fundamental forces of nature and constitute a direct push or a pull by one of those forces. Once you have actively balanced all the forces, getting a net force of zero, you then experimentally determine if the acceleration is zero. If so, you are in an inertial frame. Note, as I’ve stated before, this does not include any fancier extensions of inertial frames having to do with the Principle of Equivalence. For now, just consider the simpler version of N1.
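The one-way character of this test can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the function name `n1_frame_test`, the tolerance parameters, and the string labels are hypothetical, and the list of forces is assumed to contain only real pushes and pulls traceable to the fundamental forces.

```python
import math

def n1_frame_test(forces, measured_accel, force_tol=1e-9, accel_tol=1e-9):
    """Hypothetical sketch of the N1 test for an inertial frame.

    forces: list of force vectors (tuples) acting on the test object.
            ASSUMPTION: every entry is a real force (no pseudoforces).
    measured_accel: acceleration vector observed in the frame being tested.
    """
    # Sum the force vectors component by component.
    net = [sum(components) for components in zip(*forces)]

    # The test only applies once the forces have been balanced.
    if math.hypot(*net) > force_tol:
        return "inconclusive"

    # Balanced forces and no observed acceleration: the frame passes the test.
    if math.hypot(*measured_accel) <= accel_tol:
        return "inertial"

    # Balanced forces but a nonzero observed acceleration.
    return "non-inertial"
```

Unbalanced forces give an inconclusive result rather than a verdict, which is the sense in which N1 is a one-way if-statement and not an “if and only if.”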

With this modern logic, you can also argue by contraposition (modus tollens) and assert that if your system is non-inertial, then you can have either i) accelerations in the presence of apparently balanced forces or ii) apparently uniform motion in the presence of unbalanced forces.

The reason for determining if your frame is inertial or not is that N2, the law that determines the dynamics and statics for new systems you care about, is only valid in inertial frames. The catch is that one must use the same criteria for what constitutes a “force” that was used to test N1. That is, all forces must be linked back to the four fundamental forces of nature and constitute a direct push or a pull by one of those forces.

Let’s say you have determined you are in an inertial frame within the tolerances of your experiments. You can then go on to apply N2 to a variety of problems and assert the full powerful “if and only if” logic between forces and accelerations in the presence of any new forces and accelerations. This now allows you to solve both statics (no acceleration) and dynamics (acceleration not equal to zero) problems in a responsible and systematic way. I assert both statics and dynamics are special cases of N2.

If you give up on N1 and treat it merely as a special case of N2 and further insist that statics is all N1, this worldview can be accommodated at a price. In this case, statics and dynamics cannot be clearly distinguished. You haven’t used any metric to determine if your frame is inertial. If you are in a non-inertial frame but insist on using N2, you will be forced to introduce pseudoforces. These are “forces” that cannot be linked back to pushes and pulls associated with the four fundamental forces of nature. Although it can be occasionally useful to use pseudoforces as if they were real forces, they are physically pathological. For example, every inertial frame will agree on all the forces acting on an object, able to link them back to the same fundamental forces, and thus agree on its state of motion. In contrast, every non-inertial frame will generally require a new set of mysterious and often arbitrary pseudoforces to rationalize the motion. Different non-inertial frames won’t agree on the state of motion and won’t generally agree on whether one is doing statics or dynamics!

As mentioned, pseudoforces can be used in calculation, but it is most useful to do so when you actually know a priori that you are in a known non-inertial frame but wish to pretend it is inertial for practical reasons (for example, the rotating earth creates small pseudoforces such as the Coriolis force, the centrifugal force, and the transverse force, all byproducts of pretending the rotating earth is inertial when it really isn’t).
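For reference, the standard textbook form of N2 rewritten for a rotating frame shows where those three terms come from. The symbols are my notation, not anything defined earlier in this post: $\vec{\Omega}$ is the angular velocity of the frame, primed quantities are measured in the rotating frame, $\vec{F}$ is the sum of the real forces, and the origin of the rotating frame is assumed not to be accelerating.

$$m\vec{a}' = \vec{F} - 2m\,\vec{\Omega}\times\vec{v}' - m\,\vec{\Omega}\times(\vec{\Omega}\times\vec{r}') - m\,\dot{\vec{\Omega}}\times\vec{r}'$$

The last three terms are, in order, the Coriolis, centrifugal, and transverse pseudoforces. None of them is a push or pull by a physical agent; they appear only because $\vec{a}'$ is not the acceleration an inertial observer would measure.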

Here’s a simple example that illustrates why it is important not to treat N1 as a special case of N2. Say Alice places a box on the ground and it doesn’t accelerate; she analyzes the forces in the frame of the box. The long range gravitational force of the earth on the box pulls down and the normal (contact) force of the surface of the ground on the box pushes up. The normal force and the gravitational force must balance since the box is sitting on the ground not accelerating. OR SO SHE THINKS. The setup said “the ground” not “the earth.” “The ground” is a locally flat surface upon which Alice stands and places objects like boxes. “The earth” is a planet and is a source of the long range gravitational field. You cannot be sure whether the force you are attributing to gravity really is from a planet pulling you down (indeed, the Principle of Equivalence asserts that one cannot tell, but this is not the key to this puzzle).

Alice has not established that N1 is true in her frame and that she is in an inertial frame. This could cause headaches for her later when she tries to launch spacecraft into orbit. Yes, she thinks she knows all the forces at work on the box, but she hasn’t tested her frame. She really just applied backwards logic on N1 as a special case of N2 and assumed she was in an inertial frame because she observed the acceleration to be zero. This may seem like a “difference without a distinction,” as one of my colleagues put it. Yes, Alice can still do calculations as if the box were in static equilibrium and the acceleration was zero — at least in this particular instance at this moment. However, there is a difference that can indeed come back and bite her if she isn’t more careful.

How? Imagine that Alice was, unbeknownst to her or her ilk, on a large rotating (very light) ringworld (assuming ringworlds are stable and have very little gravity of their own). The inhabitants of the ringworld are unaware they are rotating and believe the rest of the universe is rotating around them (for some reason, they can’t see the other side of the ring). This ringworld frame is non-inertial but, as long as Alice sticks to the surface, it feels just like walking around on a planet. For Bob, an inertial observer outside the ringworld (who has tested N1 directly first), there is only one force on the box: the normal force of the ground that pushes the box towards the center of rotation and keeps the box in circular motion. All other inertial observers will agree with this analysis. This is very clearly a case of applying N2 with accelerations for the inertial observer. The box on the ground is a dynamics problem, not a statics problem. Alice, who believes she is in an inertial frame by taking N1 to be a special case of N2 (having not tested N1!), assumes there are two forces keeping the box in static equilibrium; to her it looks like a statics problem.

Is this just a harmless attribution error? If it gives the same essential results, what is the harm? Again, in an engineering class for this one particular box under these conditions, perhaps this is good enough to move on. However, from a physics point of view, it introduces potentially very large problems down the road, both practical and philosophical. The philosophical problem is that Alice has attributed a long range force where none existed, turning “ma” into a force of nature, which it isn’t. That is, the gravity experienced by the ringworld observer is “artificial”: no physical long range force is pulling the box “down.” Indeed “down,” as observed by all inertial observers, is actually “out,” away from the center of the ring. Gravity is a pseudoforce in this context. There has been a violation of what constitutes a “force” for physical systems and an unphysical, ad hoc, “force” had to be introduced to rationalize the observation of what appears to be zero local acceleration. Again, let us forgo any discussions of the Equivalence Principle here where gravity and accelerations can be entwined in funny ways.
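To make the two bookkeepings concrete, here is the box worked out both ways. The symbols are my own assumptions for illustration: $R$ is the ring radius, $\omega$ its angular speed, $m$ the mass of the box, and $N$ the magnitude of the normal force.

$$\text{Bob (inertial):}\quad N = m\omega^2 R \quad \text{(one real force, directed toward the center)}$$

$$\text{Alice (ring frame):}\quad N - m g_{\text{eff}} = 0, \qquad g_{\text{eff}} = \omega^2 R$$

Both get the same number for $N$, but Alice’s $m g_{\text{eff}}$ is just Bob’s “ma” moved to the other side of the equation and relabeled as a force.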

This still might seem harmless at first. But imagine that Alice and her team on the ring fire a rocket upwards, normal to the ground, trying to exit or orbit their “planet” under the assumption that it is a gravitational body that pulls things down. They would find a curious thing. Rockets cannot leave their “planet” by being fired straight up, no matter how fast. The rockets always fall back and hit the ground and, despite being launched straight up with what seems to be only “gravity” acting on them, their trajectories always bend systematically in one direction before hitting the “planet” again. Insisting the box test was a statics problem with N1 as a special case of N2, they have no explanation for the rocket’s behavior except to invent a new weird horizontal force that only acts on the rocket once launched and depends in weird ways on the rocket’s velocity. There does not seem to be any physical agent to this force and it cannot be attributed to the previously known fundamental forces of nature. There are no obvious sources of this force and it simply is present on an empirical level. In this case, it happens to be a Coriolis force. This, again, might seem an innocent attribution error. Who’s to say their mysterious horizontal forces aren’t “fundamental” for them? But it also implies that for every non-inertial frame, every type of ringworld or other non-inertial system, one would have a different set of “fundamental forces” and that they are all valid in their own way. This concept is anathema to what physics is about: trying to unify forces rather than catalog many special cases.

In contrast, you and all other inertial observers recognize the situation instantly: once the rocket leaves the surface and loses contact with the ringworld floor, no forces act on it anymore, so it moves in a straight line, hitting the far side of the ring. The ring has rotated some amount in the meantime. The “dynamics” the ring observers see during the rocket launch is actually a statics (acceleration equals zero) problem! So Alice and her crew have it all backwards. Their statics problem of the box on the ground is really a dynamics problem and their dynamics problem of launching a rocket off their world is really a statics problem! Since they didn’t bother to systematically test N1 and determine if they were in an inertial frame, the very notions of “statics” and “dynamics” are all turned around.
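The inertial observer’s straight-line description is easy to check numerically. The following is a minimal sketch under simplifying assumptions of my own: the ring has no real gravity, the launch is an instantaneous kick of speed `u` straight “up” (toward the center) relative to the ground, and no forces act afterwards. The function name and parameters are hypothetical.

```python
import math

def ring_rocket_landing(R, omega, u):
    """Return (flight_time, angular_offset) for a rocket kicked toward the
    center of a ring of radius R spinning at angular speed omega.

    ASSUMPTIONS: no gravity, impulsive launch, force-free flight.
    The launch pad starts at angle 0, i.e. position (R, 0), and the ring
    rotates counterclockwise as seen by the inertial observer.
    """
    # Inertial velocity at launch: u toward the center plus the pad's
    # tangential speed omega*R. Position is then r(t) = (R - u*t, omega*R*t).
    # Setting |r(t)| = R and discarding t = 0 gives the impact time.
    t_hit = 2.0 * R * u / (u**2 + (omega * R) ** 2)

    # Straight-line position at impact, as seen by the inertial observer.
    x = R - u * t_hit
    y = omega * R * t_hit

    # Angle of the impact point versus where the pad has rotated to.
    theta_impact = math.atan2(y, x)
    theta_pad = omega * t_hit

    # Positive offset: the rocket lands ahead of the pad (in the spin direction).
    return t_hit, theta_impact - theta_pad
```

For any launch speed the impact time is finite, so the rocket always comes back to the ring, and the offset is never zero. That offset is the sideways drift Alice’s team would have to blame on a mysterious horizontal force.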

So, in short, a modern interpretation of Newton’s laws of motion asserts that N1 is not a special case of N2. First establishing that N1 is true and that your frame is inertial is critical in establishing how one interprets the physics of a problem.